Tuesday, November 23, 2010

Saturday, November 13, 2010

OpenXenManager

http://www.openxenmanager.com/

Download from SVN:

svn co https://openxenmanager.svn.

or from the SourceForge repository.

To Install (Distro Agnostic):

- Download and Uncompress the source file.

- From a terminal, run: python frontend.py

Wednesday, November 10, 2010

Tuesday, November 2, 2010

Build Your Own Cloud Servers With Ubuntu Server 10.10

Have you been wanting to fly to the cloud, to experiment with cloud computing? Now is your chance. With this article, we will step through the process of setting up a private cloud system using Ubuntu Enterprise Cloud (UEC), which is powered by the Eucalyptus platform.

The system is made up of one cloud controller (also called a front-end server) and one or more node controllers. The cloud controller manages the cloud environment. You can install the default Ubuntu OS images or create your own to be virtualized. The node controllers are where you can run the virtual machine (VM) instances of the images.

System Requirements

At least two computers must be dedicated to this cloud for it to work:

- One for the front-end server (cloud or cluster controller) with a minimum 2GHz CPU, 2 GB of memory, DVD-ROM, 320GB of disk space, and an Ethernet network adapter

- One or more for the node controller(s) with a CPU that supports Virtualization Technology (VT) extensions, 1GB of memory, DVD-ROM, 250GB of disk space and an Ethernet network adapter

You might want to reference a list of Intel processors that include VT extensions. Optionally, you can run a utility, called SecurAble, in Windows. You can also check in Linux if a computer supports VT by seeing if "vmx" or "svm" is listed in the /proc/cpuinfo file. Run the command: egrep '(vmx|svm)' /proc/cpuinfo. Bear in mind, however, this tells you only if it's supported; the BIOS could still be set to disable it.
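The egrep check above can be wrapped in a small helper that reports which flag was found. This is only a sketch: vt_flag is a made-up function name, and the optional file argument exists only so the check can be tried against a saved copy of cpuinfo.

```shell
#!/bin/sh
# vt_flag is a hypothetical helper, not part of any Ubuntu tool.
# It prints "vmx" (Intel VT-x), "svm" (AMD-V) or "none", based on the
# first "flags" line of a cpuinfo-style file (default: /proc/cpuinfo).
vt_flag() {
    cpuinfo="${1:-/proc/cpuinfo}"
    flags=$(grep '^flags' "$cpuinfo" | head -n 1)
    # Pad with spaces so each flag can be matched as a whole word.
    case " $flags " in
        *" vmx "*) echo "vmx" ;;
        *" svm "*) echo "svm" ;;
        *)         echo "none" ;;
    esac
}
```

Calling vt_flag with no argument reads /proc/cpuinfo on the machine itself. As with egrep, a positive answer only means the CPU supports the extension, not that the BIOS has it enabled.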

Preparing for the Installation

First, download the CD image for Ubuntu Server on any PC with a CD or DVD burner. Then burn the ISO image to a CD or DVD. If you want to use a DVD, make sure the computers that will be in the cloud can read DVDs. If you're using Windows 7, you can open the ISO file and use the native burning utility. If you're using Windows Vista or earlier, you can download a third-party application like DoISO.

Before starting the installation, make sure the computers involved are set up with the peripherals they need (i.e., monitor, keyboard and mouse). Also make sure they're plugged into the network so they'll automatically configure their network connections.

Installing the Front-End Server

The installation of the front-end server is straightforward. To begin, simply insert the install CD, and on the boot menu select "Install Ubuntu Enterprise Cloud", and hit Enter. Configure the language and keyboard settings as needed. When prompted, configure the network settings.

When prompted for the Cloud Installation Mode, hit Enter to choose the default option, "Cluster". Then you'll have to configure the Time Zone and Partition settings. After partitioning, the installation will finally start. At the end, you'll be prompted to create a user account.

Next, you'll configure settings for proxy, automatic updates and email. Plus, you'll define a Eucalyptus Cluster name. You'll also set the IP addressing information, so users will receive dynamically assigned addresses.

Installing and Registering the Node Controller(s)

The Node installation is even easier. Again, insert the install disc, select "Install Ubuntu Enterprise Cloud" from the boot menu, and hit Enter. Configure the general settings as needed.

When prompted for the Cloud Installation Mode, the installer should automatically detect the existing cluster and preselect "Node." Just hit Enter to continue. The partitioning settings should be the last configuration needed.

Registering the Node Controller(s)

Before you can proceed, you must know the IP address of the node(s). To check from the command line:

/sbin/ifconfig

Then, you must install the front-end server's public SSH key onto the node controller (the node's IP address goes after the @ in the ssh-copy-id command):

sudo passwd eucalyptus

sudo -u eucalyptus ssh-copy-id -i ~eucalyptus/.ssh/id_rsa.pub eucalyptus@

sudo passwd -d eucalyptus

sudo euca_conf --no-rsync --discover-nodes

Getting and Installing User Credentials

Enter these commands on the front-end server to create a new folder, export the zipped user credentials to it, and then to unpack the files:

mkdir -p ~/.euca

The user credentials are also available via the web-based configuration utility; however, it would take more work to download the credentials there and move them to the server.

Setting Up the EC2 API and AMI Tools

Now you must set up the EC2 API and AMI tools on your front-end server. First, source the eucarc file to set up your Eucalyptus environment by entering: . ~/.euca/eucarc

For this to be done automatically when you login, enter the following command to add that command to your ~/.bashrc file: echo "[ -r ~/.euca/eucarc ] && . ~/.euca/eucarc" >> ~/.bashrc

Now to install the cloud user tools, enter: sudo apt-get install ec2-ami-tools ec2-api-tools

. ~/.euca/eucarc

Accessing the Web-Based Control Panel

Now you can access the web-based configuration utility. From any PC on the same network, go to the URL, https://:8443. The IP address of the cloud controller is displayed just after logging onto the front-end server. Note that this is a secure connection using HTTPS instead of just HTTP. You'll probably receive a security warning from the web browser since the server uses a self-signed certificate instead of one handed out by a known Certificate Authority (CA). Ignore the alert by adding an exception. The connection will still be secure.

The default login credentials are "admin" for both the Username and Password. The first time you log in, you'll be prompted to set up a new password and email.

Installing Images

Now that you have the basic cloud set up, you can install images. Bring up the web-based control panel, click the Store tab, and click the Install button for the desired image. It will start downloading, and then it will automatically install, which takes a long time to complete.

Running Images

Before running an image on a node for the first time, run these commands to create a keypair for SSH:

You also need to open port 22 up on the node, using the following commands:

Wednesday, October 27, 2010

Dynamic Bonding of Ethernet Interfaces

Synopsis

ifenslave [-acdfhuvV] [--all-interfaces] [--change-active] [--detach] [--force] [--help] [--usage] [--verbose] [--version] master slave ...

Description

The kernel must have support for bonding devices for ifenslave to be useful.

Options

-a, --all-interfaces

Show information about all interfaces.

-c, --change-active

Change active slave.

-d, --detach

Removes slave interfaces from the bonding device.

-f, --force

Force actions to be taken if one of the specified interfaces appears not to belong to an Ethernet device.

-h, --help

Display a help message and exit.

-u, --usage

Show usage information and exit.

-v, --verbose

Print warning and debug messages.

-V, --version

Show version information and exit.

If no options are given, the default action will be to enslave interfaces.

Example

The following example shows how to set up a bonding device and enslave two real Ethernet devices to it:

# modprobe bonding

# ifconfig bond0 192.168.0.1 netmask 255.255.0.0

# ifenslave bond0 eth0 eth1
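After enslaving, the bonding driver exposes its state under /proc/net/bonding/, with one "Slave Interface:" line per enslaved device. The sketch below lists those devices; bond_slaves is a hypothetical helper name, and the optional file argument is only there so the parsing can be tried on a saved copy of the status file.

```shell
#!/bin/sh
# bond_slaves is a hypothetical helper, not part of ifenslave.
# It prints one enslaved interface name per line, read from the bonding
# driver's status file (default: /proc/net/bonding/bond0).
bond_slaves() {
    status_file="${1:-/proc/net/bonding/bond0}"
    # Each enslaved device appears as "Slave Interface: <name>".
    sed -n 's/^Slave Interface: //p' "$status_file"
}
```

For the example above, running bond_slaves with no argument would be expected to list eth0 and eth1 once both are enslaved to bond0.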

Tuesday, October 26, 2010

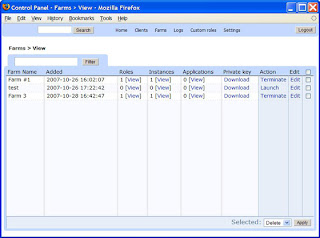

Scalr: The Auto-Scaling Open-Source Amazon EC2 Effort

Scalr is a recently open-sourced framework for managing the massive serving power of Amazon’s Elastic Computing Cloud

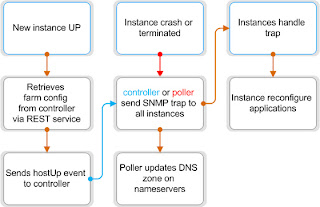

is a recently open-sourced framework for managing the massive serving power of Amazon’s Elastic Computing Cloud (EC2) service. While web services have been using EC2 for increased capacity since Fall 2006, it has never been fully “elastic” (scaling requires adding and configuring more machines when the situation arises). What Scalr promises is compelling: a “redundant, self-curing, and self-scaling” network, or a nearly self-sustainable site that could do normal traffic in the morning, and then get Buzz’d in the afternoon.

(EC2) service. While web services have been using EC2 for increased capacity since Fall 2006, it has never been fully “elastic” (scaling requires adding and configuring more machines when the situation arises). What Scalr promises is compelling: a “redundant, self-curing, and self-scaling” network, or a nearly self-sustainable site that could do normal traffic in the morning, and then get Buzz’d in the afternoon.

The Scalr framework is a series of server images, known dully in Amazon-land as Amazon Machine Images (AMI), for each of the basic website needs: an app server, a load balancer, and a database server. These AMIs come pre-built with a management suite that monitors the load and operating status of the various servers on the cloud. Scalr can increase / decrease capacity as demand fluctuates, as well as detecting and rebuilding improperly functioning instances. Scalr is also smart enough to know what type of scaling is necessary, but how well it will scale is still a fair question.

Those behind Scalr believe open-sourcing their pet project will help disrupt the established, for-pay players in the AWS management game, RightScale and WeoCeo. Intridea, a Ruby on Rails development firm, originally developed Scalr for MediaPlug, a yet-to-launch “white label YouTube” with potentially huge (and variable) media transcoding needs. Scalr was recently featured on the Amazon Web Services blog.

I’d argue that Scalr makes Amazon EC2 significantly more interesting from a developer’s standpoint. EC2 is still largely used for batch-style, asynchronous jobs such as crunching large statistics or encoding video (although increasingly more are using it for their full web server setup). Amazon for their part is delivering on the ridiculously hard cloud features, last week announcing that their EC2 instances can have static IPs and can be chosen from certain data centers (should really improve the latency). But for now, monitoring and scaling an EC2 cluster is a real chore for AWS developers, so it’s good to see some abstraction.


Ubuntu Enterprise Cloud Architecture

Overview

Ubuntu Enterprise Cloud (UEC) brings Amazon EC2-like infrastructure capabilities inside the firewall. The UEC is powered by Eucalyptus, an open source implementation for the emerging standard of the EC2 API. This solution is designed to simplify the process of building and managing an internal cloud for businesses of any size, thereby enabling companies to create their own self-service infrastructure.

As the technology is open source, it requires no licence fees or subscription for use, experimentation or deployment. Updates are provided freely and support contracts can be purchased directly from Canonical.

UEC is specifically targeted at those companies wishing to gain the benefits of self-service IT within the confines of the corporate data centre whilst avoiding lock-in to a specific vendor, expensive recurring product licences, or the use of non-standard tools that aren't supported by public providers.

This white paper tries to provide an understanding of the UEC internal architecture and possibilities offered by it in terms of security, networking and scalability.

http://www.ubuntu.com/system/files/UbuntuEnterpriseCloudWP-Architecture-20090820.pdf

Wednesday, October 20, 2010

Top 10 Virtualization Technology Companies

You might not require every bit and byte of programming they're composed of, but you'll rejoice at the components of their feature sets when you need them. These solutions scale from a few virtual machines that host a handful of Web sites, virtual desktops or intranet services all the way up to tens of thousands of virtual machines serving millions of Internet users. Virtualization and related cloud services account for an estimated 40 percent of all hosted services. If you don't know all the names on this list, it's time for an introduction.

1. VMware

Find a major data center anywhere in the world that doesn't use VMware, and then pat yourself on the back because you've found one of the few. VMware dominates the server virtualization market. Its domination doesn't stop with its commercial product, vSphere. VMware also dominates the desktop-level virtualization market and perhaps even the free server virtualization market with its VMware Server product. VMware remains in the dominant spot due to its innovations, strategic partnerships and rock-solid products.

2. Citrix

Citrix was once the lone wolf of application virtualization, but now it also owns the world's most-used cloud vendor software: Xen (the basis for its commercial XenServer). Amazon uses Xen for its Elastic Compute Cloud (EC2) services. So do Rackspace, Carpathia, SoftLayer and 1and1 for their cloud offerings. On the corporate side, you're in good company with Bechtel, SAP and TESCO.

3. Oracle

If Oracle's world domination of the enterprise database server market doesn't impress you, its acquisition of Sun Microsystems now makes it an impressive virtualization player. Additionally, Oracle owns an operating system (Sun Solaris), multiple virtualization software solutions (Solaris Zones, LDoms and xVM) and server hardware (SPARC). What happens when you pit an unstoppable force (Oracle) against an immovable object (the Data Center)? You get the Oracle-centered Data Center.

4. Microsoft

Microsoft came up with the only non-Linux hypervisor, Hyper-V, to compete in a tight server virtualization market that VMware currently dominates. Not easily outdone in the data center space, Microsoft offers attractive licensing for its Hyper-V product and the operating systems that live on it. For all Microsoft shops, Hyper-V is a competitive solution. And, for those who have used Microsoft's Virtual PC product, virtual machines migrate to Hyper-V quite nicely.

5. Red Hat

For the past 15 years, everyone has recognized Red Hat as an industry leader and open source champion. Hailed as the most successful open source company, Red Hat entered the world of virtualization in 2008 when it purchased Qumranet and with it, its own virtual solution: KVM and SPICE (Simple Protocol for Independent Computing Environment). Red Hat released the SPICE protocol as open source in December 2009.

6. Amazon

Amazon's Elastic Compute Cloud (EC2) is the industry standard virtualization platform. Ubuntu's Cloud Server supports seamless integration with Amazon's EC2 services. EngineYard's Ruby application services leverage Amazon's cloud as well.

7. Google

When you think of Google, virtualization might not make the top of the list of things that come to mind, but its Google Apps, AppEngine and extensive Business Services list demonstrates how it has embraced cloud-oriented services.

8. Virtual Bridges

Virtual Bridges is the company that invented what's now known as virtual desktop infrastructure or VDI. Its VERDE product allows companies to deploy Windows and Linux Desktops from any 32-bit or 64-bit Linux server infrastructure running kernel 2.6 or above. To learn more about this Desktop-as-a-Managed Service, download the VERDE whitepaper.

9. Proxmox

Proxmox is a free, open source server virtualization product with a unique twist: It provides two virtualization solutions. It provides a full virtualization solution with Kernel-based Virtual Machine (KVM) and a container-based solution, OpenVZ.

10. Parallels

Parallels uses its open source OpenVZ project, mentioned above, for its commercial hosting product for Linux virtual private servers. High density and low cost are the two keywords you'll hear when experiencing a Parallels-based hosting solution. These are the two main reasons why the world's largest hosting companies choose Parallels. But, the innovation doesn't stop at Linux containerized virtual hosting. Parallels has also developed a containerized Windows platform to maximize the number of Windows hosts for a given amount of hardware.

Tuesday, October 19, 2010

Difference Between Ext3 and Ext4

One main reason Linux supports so many file systems is that it works through the VFS (Virtual File System) layer, a data abstraction layer between the kernel and the userspace programs that issue file system commands. (Programs that run inside the kernel are in kernelspace; programs that don't run inside the kernel are in userspace.) The VFS layer avoids duplication of common code between file systems, and it provides a fairly universal, backward-compatible method for programs to access data on almost all types of file systems.

EXT3: Ext3 (third extended file system) provides all the features of ext2, plus journaling and backward compatibility with ext2. The backward compatibility lets you run kernels that are only ext2-aware with ext3 partitions. You can also use all of the ext2 file system tuning, repair and recovery tools with ext3, and you can upgrade an ext2 file system to ext3 without losing any of your data.

Ext3's journaling feature reduces the time it takes to bring the file system back to a sane state if it has not been cleanly unmounted (that is, in the event of a power outage or a system crash). Under ext2, when a file system is uncleanly unmounted, the whole file system must be checked. This takes a long time on large file systems. On an ext3 system, the system keeps a record of uncommitted file transactions and applies only those transactions when the system is brought back up. So a complete file system check is not required and the system comes back up much faster.

A cleanly unmounted ext3 file system can be mounted and used as an ext2 file system. This capability can come in handy if you need to revert to an older kernel that is not aware of ext3. The kernel sees the ext3 file system as an ext2 file system.

Ext4: Ext4 is part of the Linux 2.6.28 kernel and is the evolution of the most used Linux file system, Ext3. In many ways, Ext4 is a deeper improvement over Ext3 than Ext3 was over Ext2. Ext3 was mostly about adding journaling to Ext2, but Ext4 modifies important data structures of the file system, such as the ones used to store file data. The result is a file system with an improved design, better performance, reliability and features.
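The differences described above show up in the feature list that tune2fs -l prints on its "Filesystem features:" line: journaling appears as has_journal, and Ext4's changed data structures for file data appear as extent. The sketch below guesses the generation from that list. ext_generation is a made-up helper name, and this is a rough heuristic rather than an authoritative test.

```shell
#!/bin/sh
# ext_generation is a hypothetical helper. Its single argument is the
# space-separated feature list from the "Filesystem features:" line of
# tune2fs -l. The classification is a heuristic, not a definitive check.
ext_generation() {
    features=" $1 "
    case "$features" in
        *" extent "*)      echo "ext4" ;;   # extent-mapped files
        *" has_journal "*) echo "ext3" ;;   # journal, no extents
        *)                 echo "ext2" ;;   # neither feature present
    esac
}
```

One way to feed it, with the device name as a placeholder: ext_generation "$(tune2fs -l /dev/sda1 | sed -n 's/^Filesystem features: *//p')"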

Wednesday, October 6, 2010

Sunday, October 3, 2010

OCFS Cluster File System

OCFS stands for Oracle Cluster File System. It is a shared disk file system developed by Oracle Corporation and released under the GNU General Public License.

The first version of OCFS was developed mainly to accommodate Oracle database files for clustered databases. Because of that, it was not a POSIX-compliant file system. With version 2, the POSIX features were included.

OCFS2 (version 2) was integrated into version 2.6.16 of the Linux kernel. Initially, it was marked as "experimental" (alpha-test) code. This restriction was removed in Linux version 2.6.19. With kernel version 2.6.29, more features were added to ocfs2, notably access control lists and quotas.

OCFS2 uses a distributed lock manager which resembles the OpenVMS DLM but is much simpler.

Hardware Requirement

* Shared Storage, accessible over SAN between cluster nodes.

* HBA for Fiber SAN on each node.

* Network connectivity for heartbeat between servers.

OS Installation

Regular installation of RHEL 5.x 64-bit with the following configuration:

1. SELinux must be disabled.

2. Time Zone - Saudi Time (GMT +3).

Packages

1. Gnome for Graphics desktop.

2. Development libraries

3. Internet tools - GUI and text based

4. Editors - GUI and text based

Partitioning the HDD

1. 200 MB for /boot partition

2. 5 GB for /var partition

3. 5 GB for /tmp partition

4. Rest of the space to / partition.

The server should be up to date with the latest patches from RedHat Network.

Installation of OCFS2 Kernel Module and Tools

OCFS2 Kernel modules and tools can be downloaded from the Oracle web sites.

OCFS2 Kernel Module:

http://oss.oracle.com/projects/ocfs2/files/RedHat/RHEL5/x86_64/1.4.1-1/2.6.18-128.1.1.el5

http://oss.oracle.com/projects/ocfs2/files/RedHat/RHEL5/x86_64/1.4.1-1/2.6.18-128.1.10.el5/

Note that 2.6.18-128.1.1.el5 should match the current running kernel on the server. A new OCFS2 Kernel package should be downloaded and installed each time the kernel is updated to a new version.
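Since the module package has to track the running kernel, the file name to look for can be derived from uname -r. The sketch below assumes the naming pattern ocfs2-<kernel release>-<OCFS2 version>.el5.x86_64.rpm and a default OCFS2 version of 1.4.1-1. Both the pattern and the helper name ocfs2_module_rpm are assumptions made for illustration.

```shell
#!/bin/sh
# ocfs2_module_rpm is a hypothetical helper. It prints the kernel-module
# package file name expected for a given kernel release, assuming the
# pattern ocfs2-<kernel>-<version>.el5.x86_64.rpm.
ocfs2_module_rpm() {
    kernel="${1:-$(uname -r)}"        # defaults to the running kernel
    ocfs2_version="${2:-1.4.1-1}"     # assumed default OCFS2 version
    echo "ocfs2-${kernel}-${ocfs2_version}.el5.x86_64.rpm"
}
```

Running it with no arguments after a kernel update shows which package name to look for on the download site.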

OCFS2 Tools

OCFS2 Console

OCFS2 Tools and Console depend on several other packages which are normally available on a default RedHat Linux installation, except for the VTE (a terminal emulator) package.

So in order to satisfy the dependencies of OCFS2 you have to install the vte package using

yum install vte

After completing the VTE installation start the OCFS2 installation using regular RPM installation procedure.

rpm -ivh ocfs2-2.6.18-128.1.1.el5-1.4.1-1.el5.x86_64.rpm

rpm -ivh ocfs2console-1.4.1-1.el5.x86_64.rpm

rpm -ivh ocfs2-tools-1.4.1-1.el5.x86_64.rpm

This will copy the necessary files to their corresponding locations.

The following tools and files are used frequently:

/etc/init.d/o2cb

/sbin/mkfs.ocfs2

/etc/ocfs2/cluster.conf (this folder and file must be created manually)

OCFS2 Configuration

It is assumed that the shared SAN storage is connected to the cluster nodes and is available as /dev/sdb. This document covers the installation of a two-node (node1 and node2) ocfs2 cluster only.

Following are the steps required to configure the cluster nodes.

Create the folder /etc/ocfs2

mkdir /etc/ocfs2

Create the cluster configuration file /etc/ocfs2/cluster.conf and add the following contents:

cluster:
	node_count = 2
	name = vmsanstorage

node:
	ip_port = 7777
	ip_address = 172.x.x.x
	number = 1
	name = mc1.ocfs
	cluster = vmsanstorage

node:
	ip_port = 7777
	ip_address = 172.x.x.x
	number = 2
	name = mc2.ocfs
	cluster = vmsanstorage

Note that the:

* Node name should match the “hostname” of corresponding server.

* Node number should be unique for each member.

* Cluster name for each node should match the “name” field in “cluster:” section.

* “node_count” field in “cluster:” section should match the number of nodes.
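The last rule in the list is easy to get wrong when a node is added later. A minimal sketch of a check, assuming the stanza layout shown above, where each node starts with a line reading node: (check_node_count is a hypothetical helper, not an OCFS2 tool):

```shell
#!/bin/sh
# check_node_count is a hypothetical helper. It compares the node_count
# value in a cluster.conf-style file with the number of "node:" stanzas
# and returns non-zero when they differ.
check_node_count() {
    conf="${1:-/etc/ocfs2/cluster.conf}"
    # Value after "=" on the node_count line, with whitespace stripped.
    declared=$(awk -F'=' '/node_count/ { gsub(/[ \t]/, "", $2); print $2; exit }' "$conf")
    # grep -c exits non-zero on zero matches, hence the "|| true".
    actual=$(grep -c '^node:' "$conf" || true)
    if [ "$declared" = "$actual" ]; then
        echo "ok: $actual nodes"
    else
        echo "mismatch: node_count=$declared but $actual node stanzas"
        return 1
    fi
}
```

Running check_node_count on each node after editing the file catches the mismatch before the o2cb service is restarted.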

O2CB cluster service configuration

The o2cb cluster service can be configured using:

/etc/init.d/o2cb configure (This command will show the following dialogs)

Configuring the O2CB driver

This will configure the on-boot properties of the O2CB driver.

The following questions will determine whether the driver is loaded on boot.

The current values will be shown in brackets ('[]').

Hitting <ENTER> without typing an answer will keep that current value.

Ctrl-C will abort.

Load O2CB driver on boot (y/n) [n]: y

Cluster stack backing O2CB [o2cb]:

Cluster to start on boot (Enter "none" to clear) [ocfs2]: ocfs2

Specify heartbeat dead threshold (>=7) [31]:

Specify network idle timeout in ms (>=5000) [30000]:

Specify network keepalive delay in ms (>=1000) [2000]:

Specify network reconnect delay in ms (>=2000) [2000]:

Writing O2CB configuration: OK

Loading filesystem "ocfs2_dlmfs": OK

Mounting ocfs2_dlmfs filesystem at /dlm: OK

Starting O2CB cluster ocfs2: OK

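Note that the driver should be loaded while booting and the "Cluster to start" value must match the cluster name, that is, the "name" field in the "cluster:" section of /etc/ocfs2/cluster.conf. The sample dialog above uses "ocfs2"; if your cluster.conf names the cluster differently (vmsanstorage in the example file), enter that name instead.

As a best practice, it is advised to reboot the server after successfully completing the above configuration.

Formatting and Mounting the shared file system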

Before we can start using the shared filesystem, we have to format the shared device using OCFS2 filesystem.

Following command will format the filesystem with ocfs2 and will set some additional features.

mkfs.ocfs2 -T mail -L ocfs-mnt --fs-features=backup-super,sparse,unwritten -M cluster /dev/sdb

Where :

-T mail

Specifies how the filesystem is going to be used, so that mkfs.ocfs2 can choose optimal filesystem parameters for that use.

The "mail" option is appropriate for file systems which will have many metadata updates. It creates a larger journal.

-L ocfs-mnt

Sets the volume label for the filesystem. It will be used instead of the device name to identify the block device in /etc/fstab.

--fs-features=backup-super,sparse,unwritten

Turn specific file system features on or off.

backup-super

Create backup super blocks for this volume

sparse

Enable support for sparse files. With this, OCFS2 can avoid allocating (and zeroing) data to fill holes

unwritten

Enable unwritten extents support. With this turned on, an application can request that a range of clusters be preallocated within a file.

-M cluster

Defines if the filesystem is local or clustered. Cluster is used by default.

/dev/sdb

Block device that needs to be formatted.

Note: By default, mkfs.ocfs2 covers only a 4-node cluster. If you have more nodes, specify the number of node slots using -N number-of-node-slots.

We are ready to mount the new filesystem once the format operation is completed successfully.

You may mount the new filesystem using the following command. It is assumed that the mount point (/mnt) exists already.

mount /dev/sdb /mnt

If the mount operation was successfully completed you can add the following entry to /etc/fstab for automatic mounting during the bootup process.

LABEL=ocfs-mnt /mnt ocfs2 rw,_netdev,heartbeat=local 0 0

Test the newly added fstab entry by rebooting the server. The server should mount /dev/sdb automatically on /mnt. You can verify this using the "df" command after the reboot.
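Besides df, the kernel's own mount table can confirm that /mnt is really mounted as ocfs2. The sketch below reads a /proc/mounts-style file, whose fields are device, mount point and filesystem type. mounted_fstype is a made-up helper name, and the optional second argument exists only so the parsing can be tried on a saved copy.

```shell
#!/bin/sh
# mounted_fstype is a hypothetical helper. It prints the filesystem type
# mounted at the given mount point, or nothing if it is not mounted.
# Fields in /proc/mounts: device, mount point, fstype, options, ...
mounted_fstype() {
    mount_point="$1"
    mounts_file="${2:-/proc/mounts}"
    # If a mount point appears more than once, the last entry wins.
    awk -v mp="$mount_point" '
        $2 == mp { fstype = $3 }
        END { if (fstype != "") print fstype }
    ' "$mounts_file"
}
```

After the reboot, mounted_fstype /mnt would be expected to print ocfs2 if the fstab entry worked.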

Wednesday, September 29, 2010

The ipcs Command

The ipcs command can be used to obtain the status of all System V IPC objects.

ipcs -q: Show only message queues

ipcs -s: Show only semaphores

ipcs -m: Show only shared memory

ipcs --help: Additional arguments

By default, all three categories of objects are shown. Consider the following sample output of ipcs:

------ Shared Memory Segments --------
shmid     owner     perms     bytes     nattch    status

------ Semaphore Arrays --------
semid     owner     perms     nsems     status

------ Message Queues --------
msqid     owner     perms     used-bytes  messages
0         root      660       5           1

Here we see a single message queue which has an identifier of "0". It is owned by the user root, and has octal permissions of 660, or -rw-rw----. There is one message in the queue, and that message has a total size of 5 bytes.

The ipcs command is a very powerful tool which provides a peek into the kernel's storage mechanisms for IPC objects. Learn it, use it, revere it.

To remove all IPC objects whose ipcs line matches a given owner (zabbix in this example), use the following commands.

Shared memory segments:

for i in `ipcs -m | grep zabbix | awk '{print $2}'`; do ipcrm -m $i; done

Semaphore arrays:

for i in `ipcs -s | grep zabbix | awk '{print $2}'`; do ipcrm -s $i; done

Message queues:

for i in `ipcs -q | grep zabbix | awk '{print $2}'`; do ipcrm -q $i; done
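The grep in these loops matches "zabbix" anywhere on the line. A slightly stricter sketch compares only the owner column, assuming the ipcs layout in which the columns are key, id, owner (the same layout the loops rely on by taking $2 as the id). ipc_ids_for_owner is a hypothetical helper name.

```shell
#!/bin/sh
# ipc_ids_for_owner is a hypothetical helper. It reads ipcs output on
# stdin and prints the id (column 2) of every row whose owner (column 3)
# equals the given user name exactly.
ipc_ids_for_owner() {
    owner="$1"
    awk -v owner="$owner" '$3 == owner { print $2 }'
}
```

It could then replace the grep and awk pair, for example: ipcs -m | ipc_ids_for_owner zabbix | while read -r id; do ipcrm -m "$id"; done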


CentOS vs Ubuntu

Hi All

A few weeks ago, I had to set up a new production LAMP server to host a few of our clients' sites, medium-size eCommerce websites. I wanted to share my experience, as I came across the three big (and free) Linux distributions while I evaluated and set up the machines. I had previously set up Ubuntu Server 8.04 for our development and staging environment while researching the related family of North American Linux distributions: RedHat, Fedora and CentOS.

I’ll start with the positive: in the last few years Linux distributions in general have become mainstream operating systems, and most of the installation process is user friendly. Almost every distro offers a ’server’ edition of the OS which comes mostly configured with what a production LAMP server needs to have installed already. The installation packages are clearly labeled for i386 or x86_64, and most even offer a net install, which in CentOS’s case requires downloading only about 8.3MB of ISO file; the rest is done on the fly.

Now, let’s delve into the differences that affected my decisions, labeled according to key points and in order of importance to all of us:

Setup Efficiency: ROI (Return on Investment) and Learning Curve

I had the experience of setting up both Ubuntu server and CentOS server editions on two different machines. Both machines had similar configurations of two 250GB hard drives mirrored in software RAID 1. The rest of the hardware setup is pretty much the standard Intel-based processors. Both Ubuntu and CentOS had no problem recognizing all the hardware on the machines, and the setup process went pretty smoothly. The differences began showing after the initial setup: while CentOS shows an additional, very helpful setup step (NIC assignments, firewall, and services setup), Ubuntu had no such thing and showed the login prompt right after boot. What I found surprising is that Ubuntu required additional steps in order to download and install SSH using aptitude - if one chooses a server edition, shouldn’t it be set up for you by default?

Although aptitude is great package management software, I have found that the server version of Ubuntu is just not mature enough, or simply chooses a minimalistic approach which doesn’t fit my understanding of a server distro. Many of the tasks that CentOS performed by default, or offered as options during the install, were missing in Ubuntu. At the end of the day, setting up Ubuntu took days while CentOS took hours. Did I say ROI? CentOS is the clear winner.

Package Management Systems

Ubuntu prides itself on apt-get and aptitude which builds itself on top of the debian package management system while CentOS, Fedora and RedHat, use the rpm and yum package management systems. After using all systems I can clearly say that I favor the yum and rpm package management systems.

First, with apt-get/apt-cache/aptitude I had to constantly refer back to the documentation on Ubuntu’s site, and I still cannot remember which one to use for searching, installing, upgrading, describing, or removing packages - do we really need the separation? With yum and rpm, each task is simply a separate option and you are good to go; all the information flows into the terminal, and it took me only one glance at ‘man yum’ to understand what goes where.
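For readers who keep mixing up the tools, the common tasks map onto each family roughly as sketched below. pkg_cmd is a hypothetical lookup helper written for this comparison; it only prints the command name and runs nothing.

```shell
#!/bin/sh
# pkg_cmd is a hypothetical helper. Given a package-manager family
# ("apt" or "yum") and a task, it prints the usual command for that
# task. It returns non-zero for anything it does not know.
pkg_cmd() {
    case "$1:$2" in
        apt:search)  echo "apt-cache search" ;;
        apt:info)    echo "apt-cache show" ;;
        apt:install) echo "apt-get install" ;;
        apt:remove)  echo "apt-get remove" ;;
        apt:upgrade) echo "apt-get upgrade" ;;
        yum:search)  echo "yum search" ;;
        yum:info)    echo "yum info" ;;
        yum:install) echo "yum install" ;;
        yum:remove)  echo "yum remove" ;;
        yum:upgrade) echo "yum update" ;;
        *)           echo "unknown"; return 1 ;;
    esac
}
```

The table makes the complaint concrete: on the Debian side the tasks are split between apt-cache and apt-get, while yum handles all five as subcommands of one tool.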

Second, in the particular case of apache, vhosts, and extensions, aptitude allows flexibility at the price of re-arranging apache.conf and vhosts.conf into a collection of files and folders. Yum does a similar thing, but I found that yum’s method keeps the original httpd.conf mostly intact, which allowed my familiarity with basic apache configuration to take over and finish the install in no time. In my opinion, the deviation from the standard has no benefit whatsoever. Flexibility comes at the cost of familiarity, and here yum had the upper hand in ease of use.

Third, setting up a package that requires dependencies is equally good in both systems: they both do a very good job of finding the dependencies, looking up their download sources, installing and setting it all up. However, I did find that yum had the best reporting system: after it gathered all the information, it showed a useful status report while asking permission to proceed. This is valuable for sys admins and it does save time. Once more, yum feels like a more mature package management system.

Production OS? Stability vs Cutting Edge

Here is where I want to describe the feeling after setting it all up. At the end of the day, when everything was set up and configured, the CentOS system felt much more secure and I knew exactly what was installed and what was not. Conversely, Ubuntu server did not give me that warm fuzzy feeling that ‘all is good’, and if I needed to make a change, I would have to refer to the documentation before touching the server.

If you ask datacenter Linux admins who manage production Linux clusters on a regular basis, they all point to CentOS or RedHat because of their stability and performance record. In other words, you won’t get the latest cutting-edge packages as you would with Ubuntu or Fedora - but what you do get is far less likely to be flawed.

Conclusion

The bottom line is that distro preference is a personal decision. Personal to the individual who administers the systems and personal to the organization. We’ve chosen CentOS over Ubuntu, Fedora, and RedHat. The only option I see that might change is adopting RedHat due to the technical support that is offered for a fee. Hands down, CentOS provided the fastest configuration time, lowest learning curve, better ROI, superior package management system, and a good fuzzy feeling of stability.

Thanks to CentOS, we can get back to our main passion: Web Development…

Reliance Net Connect on Ubuntu 9.04 by using ZTE MG880 Modem (without wvdial)

Hi All

If you google around for how to configure your Reliance USB modem on Ubuntu 9.04, almost all links lead to wvdial configuration. But there is another way of doing it without touching wvdial: using pppconfig.

To avoid confusion and errors, please copy and paste the commands. Here we go...

1. First we have to find out the modem's vendor id and product id. Use the following command to find them.

sudo lsusb -v

You can see output like this...

Bus 005 Device 007: ID 19d2:fffd

Device Descriptor:

bLength 18

bDescriptorType 1

bcdUSB 1.01

bDeviceClass 0 (Defined at Interface level)

bDeviceSubClass 0

bDeviceProtocol 0

bMaxPacketSize0 16

idVendor 0x19d2

idProduct 0xfffd

bcdDevice 0.00

iManufacturer 1 ZTE, Incorporated

iProduct 2 ZTE CDMA Tech

iSerial 3 Serial Number

........

Note down the idVendor and idProduct values somewhere.

2. Load the kernel module with the vendor id and product id.
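
If you would rather not scroll through the whole listing, a small filter can pull out the two values. This is a sketch, not part of the original steps; the function name usb_ids is made up, and it simply prints one "vendor product" pair for every device it finds in the lsusb -v output.

```shell
#!/bin/sh
# usb_ids: read `lsusb -v` output on stdin and print "idVendor idProduct"
# for each device. usb_ids is a hypothetical helper name.
usb_ids() {
    awk '$1 == "idVendor"  { vendor = $2 }
         $1 == "idProduct" { print vendor, $2 }'
}
```

Use it as sudo lsusb -v | usb_ids and look for the pair that belongs to your modem (19d2 is the ZTE vendor id in the output above).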

sudo gedit /boot/grub/grub.cfg --->> for Ubuntu 9.04

In the grub.cfg file, add the following at the end of the kernel line (not on the next line)

usbserial.vendor=0x19d2 usbserial.product=0xfffd (replace the values with yours)

### BEGIN /etc/grub.d/10_linux ###

set root=(hd0,3)

search --fs-uuid --set 126317bd-2dd9-4016-8e65-df85fda33a34

menuentry "Ubuntu, linux 2.6.28-11-generic" {

linux /boot/vmlinuz-2.6.28-11-generic root=UUID=126317bd-2dd9-4016-8e65-df85fda33a34 ro quiet splash usbserial.vendor=0x19d2 usbserial.product=0xfffd

initrd /boot/initrd.img-2.6.28-11-generic

}

menuentry "Ubuntu, linux 2.6.28-11-generic (single-user mode)" {

linux /boot/vmlinuz-2.6.28-11-generic root=UUID=126317bd-2dd9-4016-8e65-df85fda33a34 ro single

initrd /boot/initrd.img-2.6.28-11-generic

}
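
If you want to preview the change before editing by hand, the following sketch appends the two parameters to kernel lines read from standard input and writes the result to standard output, so nothing on disk is touched. The function name add_usbserial is made up for illustration. Note one difference from the listing above: it appends to every linux line that does not already carry the parameters, including the single-user entry.

```shell
#!/bin/sh
# add_usbserial VENDOR PRODUCT: filter grub.cfg text from stdin to stdout,
# appending the usbserial parameters to each "linux" line that lacks them.
# add_usbserial is a hypothetical helper name.
add_usbserial() {
    awk -v v="$1" -v p="$2" '
        /^[ \t]*linux[ \t]/ && !/usbserial\.vendor=/ {
            $0 = $0 " usbserial.vendor=" v " usbserial.product=" p
        }
        { print }'
}
```

For example, add_usbserial 0x19d2 0xfffd < /boot/grub/grub.cfg | less shows what the edited file would look like.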

3. Reboot/Restart your computer.
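
After the reboot you can check that the kernel actually received the parameters. The sketch below looks at the running kernel's command line in /proc/cmdline; the function name has_usbserial_params is made up for illustration.

```shell
#!/bin/sh
# has_usbserial_params [FILE]: succeed if the kernel command line in FILE
# (default /proc/cmdline) contains both usbserial parameters.
# has_usbserial_params is a hypothetical helper name.
has_usbserial_params() {
    file="${1:-/proc/cmdline}"
    grep -q 'usbserial\.vendor=' "$file" &&
        grep -q 'usbserial\.product=' "$file"
}
```

For example, has_usbserial_params && echo "parameters are active". If it reports nothing, the grub.cfg edit probably did not land on the kernel line you booted.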

4. Close all terminals. Open a new terminal and type

sudo pppconfig

In the new window, select Create and give ok...

Type provider name (say reliance) and give ok

Select Dynamic and give ok

Select PAP and give ok

Enter your Reliance Number and give ok

Password is same as your Reliance Number, enter it and give ok

Do not change Modem Speed

Select Tone and give ok

Enter #777 and give ok

Enter yes

Select Manual and give ok

Give your modem port. In my case (and in most cases) it is /dev/ttyUSB0

Now you are all set. Check that everything is fine, then select Finished and give ok

Finally, in the last window, select Quit and give ok

Now you have configured your modem successfully and are all set to connect.

5. To establish the internet connection, type the following command in the terminal...

sudo pon reliance

6. To check that the connection is established, use this command...

ifconfig

Now you should see an entry like this...

ppp0 Link encap:Point-to-Point Protocol

inet addr:123.239.41.80 P-t-P:220.224.134.12 Mask:255.255.255.255

UP POINTOPOINT RUNNING NOARP MULTICAST MTU:1500 Metric:1

RX packets:3429 errors:23 dropped:0 overruns:0 frame:0

TX packets:3683 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:3

RX bytes:2309338 (2.3 MB) TX bytes:731534 (731.5 KB)

Once the connection is established you can close the terminal.
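
If you only want the address, or want to test for the link in a script, a filter like the one below can help. It is a sketch that assumes the ifconfig output format shown above ("inet addr:" on an indented line under the interface name); the function name ppp_addr is made up for illustration.

```shell
#!/bin/sh
# ppp_addr [IFACE]: read ifconfig output on stdin and print the IPv4
# address of IFACE (default ppp0); prints nothing if it is not there.
# ppp_addr is a hypothetical helper name.
ppp_addr() {
    awk -v ifc="${1:-ppp0}" '
        /^[^ \t]/ { cur = $1 }          # unindented line starts a new interface
        cur == ifc && match($0, /inet addr:[0-9.]+/) {
            # skip the 10 characters of "inet addr:" and print the rest
            print substr($0, RSTART + 10, RLENGTH - 10)
            exit
        }'
}
```

Use it as ifconfig | ppp_addr. An empty result means ppp0 has no address yet, so the connection is not up.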

7. To disconnect...

sudo poff reliance

Extra

If you want to monitor your internet speed, install the pppstatus package (sudo apt-get install pppstatus) and type sudo pppstatus in the terminal.

That's all I had to do to get the Internet running on my laptop.